Cambridge, Mass.

Educators have long held that the interactions between students and professors defy simple reduction. Yet in several areas of campus life, colleges are converting the student experience into numbers to crunch in the name of improving education.

Think of it as higher education meets Moneyball. In the movie, Oakland A's General Manager Billy Beane reinvents his struggling baseball team by analyzing statistics in new ways to predict player success. In education, college managers are doing something similar to forecast student success—in admissions, advising, teaching, and more.

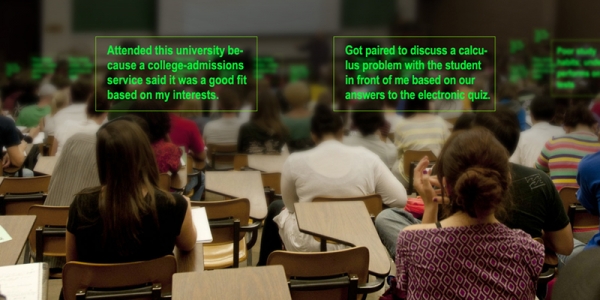

In one Harvard calculus class, even who you pair up with for group discussion is determined by a computer, one that tracks how well students are doing on the material.

The software records Ben Falloon's location in the back row and how he answers each practice problem. Come discussion time, it tries to stir up debate by matching students who gave different responses to the most recent question. For Mr. Falloon, the system, called Learning Catalytics, spits out this prompt: "Please discuss your response with Alexis Smith (in front of you) and Emily Kraemer (to your left)."

Getting data down to frontline students and instructors like this marks a shift for an industry that often focuses on pushing numbers up to accreditors and trustees, says Mark Milliron, formerly of the Bill & Melinda Gates Foundation, which backs college data-mining.

"I know more about my 11-year-old son's sixth-grade basketball team than the average college faculty member knows about their incoming class, in terms of key variables that are going to make them successful or not successful," he adds. "It is a sin that that is the case."

Today, half of students quit college before earning a credential. Proponents feel that making better use of data to inform decisions, known as "analytics," can help solve that problem while also improving teaching.

But skeptics worry that data-mining fosters a factory-line approach to education, one that wrings efficiency out of the existing system rather than reinventing it in a digital era.

One analytics tactic—monitoring student clicks in course-management systems—especially worries critics like Gardner Campbell, director of professional development and innovative initiatives at Virginia Tech. He sees these systems as sterile environments where students respond to instructor prompts rather than express creativity. Analytics projects that focus on such systems threaten to damage colleges much like high-stakes standardized testing harmed elementary and secondary schools, he argues.

"Counting clicks within a learning-management system runs the risk of bringing that kind of deadly standardization into higher education," Mr. Campbell says.

Educational data-mining also presents ethical questions. How much should students be told about the behind-the-scenes computer analysis that manipulates their educational experiences? And how far should colleges go in shaping those experiences based on data patterns?

Better Choices

When you buy a book on Amazon, you get a shopping experience tailored to your personal tastes. One piece of the college data puzzle is figuring out how to bring that customization to important educational decisions, like which courses to take, which major to pursue, and which college to enroll in.

Think of the problem in terms of a supermarket cereal aisle, says Tristan Denley, provost of Austin Peay State University, in Clarksville, Tenn. You find every choice known to man. But unless you've opened the box, you have very little information to judge what's inside. How do you pick one?

Part of the answer, he says, is technology that can look at people like you who have made such decisions in the past, and see whether those decisions worked out. In April, Austin Peay debuted software that recommends courses based on a student's major, academic record, and how similar students fared in that class.
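To make the "people like you" idea concrete, here is a minimal Python sketch of one common way such a recommender can work: a nearest-neighbor approach. It is not Austin Peay's actual method, which the article does not describe in detail. The student records, course codes (such as `MATH1010`), the similarity measure, and the helper names `predict_grade` and `recommend` are all hypothetical. The sketch also covers only the "how similar students fared" signal and ignores the student's major.

```python
# Hypothetical past grade records on a 4.0 scale: student -> {course: grade points}.
# Course codes and grades are invented for illustration.
RECORDS = {
    "s1": {"MATH1010": 3.0, "ENGL1010": 3.5, "BIOL1110": 3.0},
    "s2": {"MATH1010": 2.0, "ENGL1010": 2.5, "BIOL1110": 1.5, "HIST2010": 3.5},
    "s3": {"MATH1010": 3.5, "ENGL1010": 4.0, "BIOL1110": 3.5, "HIST2010": 2.5},
    "s4": {"MATH1010": 1.5, "ENGL1010": 2.0},
}


def similarity(a, b):
    """Return 1 / (1 + mean absolute grade gap) over courses both students took; 0 if none."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    gap = sum(abs(a[c] - b[c]) for c in shared) / len(shared)
    return 1.0 / (1.0 + gap)


def predict_grade(student, course, records, k=2):
    """Similarity-weighted mean grade of the k most similar students who took `course`."""
    mine = records[student]
    peers = [
        (similarity(mine, rec), rec[course])
        for name, rec in records.items()
        if name != student and course in rec
    ]
    top = sorted(peers, reverse=True)[:k]
    total = sum(weight for weight, _ in top)
    if total == 0:
        return None  # nobody comparable has taken this course
    return sum(weight * grade for weight, grade in top) / total


def recommend(student, candidates, records, k=2):
    """Rank candidate courses by predicted grade, highest first, skipping unpredictable ones."""
    scored = []
    for course in candidates:
        grade = predict_grade(student, course, records, k)
        if grade is not None:
            scored.append((grade, course))
    return [course for _, course in sorted(scored, reverse=True)]


print(recommend("s4", ["BIOL1110", "HIST2010", "ARTS1030"], RECORDS))  # ['HIST2010', 'BIOL1110']
```

Student `s4`'s grades most resemble those of `s2`, who did well in history and poorly in biology, so history ranks first. A course that no comparable student has taken cannot be scored and is left out.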

Some professors fretted about students misinterpreting the Netflix-like tips as commands, but the Gates Foundation quickly ponied up $1-million to refine the software so other colleges can adopt it.

Now Austin Peay plans to expand on its work with a new tool that offers tips for making a more important decision: picking a major.

The feature, to be rolled out this spring, focuses on two problems: students who don't know which major to pick, and students who thought they knew, but ended up with a bad fit. A human adviser might be at a loss to suggest an alternate path, Mr. Denley says. But data could offer concrete possibilities.

For example, students often start climbing the ladder to become a nurse or a doctor, perhaps because they have relatives in those professions. Yet early on it's clear their grades won't carry them up to those goals. The data robot might suggest another health field. It might also suggest something totally different, like graphic design, because a student displays a pattern of grades similar to others who flourished in that direction, Mr. Denley says.

Similar ideas are taking hold in the world of admissions. One company getting buzz is ConnectEDU, sometimes described as an eHarmony for college matchmaking. Its founder, Craig Powell, dreams that students won't even have to apply to college "because an algorithm will have already told them and the schools where they would fit best," as The Atlantic reported recently.

Mr. Powell hopes to make that happen by plugging high schools and colleges into an online platform that feels a lot like Facebook. And like Facebook, its news feed and customized recommendations hinge on vast amounts of information: more than 250 data points for each student, including high-school academic records, standardized test scores, financial circumstances, career ambitions, and geographic locations. So far, 2.5 million high-school students have ConnectEDU profiles.

Say one of those students enjoys working with his or her hands and aspires to live a middle-American lifestyle. But the student has marginal grades and no college plans. The software might suggest a program at a local community college that qualifies the student for laying ground wire.

For colleges seeking prospective students, meanwhile, the algorithms get flipped. Privacy laws prevent Mr. Powell from giving kids' names and addresses to college admissions officers. But what he can offer is anonymous demographic information on potential applicants that might interest them, such as a first-generation African-American male who lives in Miami and makes straight A's in a rigorous math curriculum. When a college wants to single out a kid, it pings the student in the ConnectEDU system with a message that resembles a friend request. If the student accepts, his or her profile gets exposed, and the college can cultivate that student, Mr. Powell says.

"The colleges can be informing instead of direct-mailing and mass marketing," he says. "And you actually build relationships at the end of this, as opposed to working leads."

Classroom 'Clickstreams'

Another set of data-driven experiments involves how to teach those students once they start taking college classes, such as the one here at Harvard where the computer picks study partners.

That Learning Catalytics system grew out of technology developed in Eric Mazur's physics class. It marks the latest effort in the Harvard professor's long campaign to perfect the art of interactive teaching. Science instructors around the world have adopted his Peer Instruction method, and the technique helped popularize the classroom-response devices known as "clickers."

Mr. Mazur argues that his new software solves at least three problems. One, it selects student discussion groups. Two, it helps instructors manage the pace of classes by automatically figuring out how long to leave questions open so the vast majority of students will have enough time. And three, it pushes beyond the multiple-choice problems typically used with clickers, inviting students to submit open-ended responses, like sketching a function with a mouse or with their finger on the screen of an iPad.

"This is grounded on pedagogy; it's not just the technology," says Mr. Mazur, a gadget skeptic who feels technology has done "incredibly little to improve education."
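The article does not say how Learning Catalytics decides how long to leave a question open. One plausible rule, sketched below in Python as an assumption rather than the product's actual logic, is to look at how long students took on an earlier question and keep the next one open until the time by which a chosen share of them had answered. The 90 percent target and the function name `time_to_cover` are hypothetical. The point of using a percentile rather than the slowest time is that a single straggler does not hold up the whole class.

```python
from math import ceil


def time_to_cover(response_times, coverage=0.9):
    """Smallest observed time (seconds) by which at least `coverage` of students had answered."""
    if not response_times:
        raise ValueError("need at least one past response time")
    ordered = sorted(response_times)
    # Position of the first time that covers the target share of students.
    index = max(ceil(coverage * len(ordered)) - 1, 0)
    return ordered[index]


# Hypothetical seconds-to-answer from a previous question; one student took four minutes.
past = [30, 45, 50, 60, 62, 70, 75, 80, 95, 240]
print(time_to_cover(past))  # 95: nine of ten students had answered by then
```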

The pedagogy that informs Learning Catalytics dates to 1991, when Mr. Mazur arrived at a painful revelation: his method of instruction, the lecture, was ineffective. The trigger came partway through a course at Harvard, Mr. Mazur recalled in a 2009 Science article headlined "Farewell, Lecture?" The professor decided to test students' comprehension of one of the first topics they had covered, Newton's laws of motion. But he didn't give traditional problems. Instead, he asked the students basic conceptual questions, like comparing "forces that a heavy truck and a light car exert on one another when they collide."

They struggled. The reason: they had memorized the information rather than assimilated it.

So Mr. Mazur began teaching through questioning. In class, his students now work on conceptual problems. Then they pair off with peers who have different answers and try to convince each other that they're correct.

Those on the right track should prevail by force of reason, Mr. Mazur says. And they should be more likely than the professor to persuade classmates. That's because they still understand the obstacles in their peers' heads, whereas the material is so clear to Mr. Mazur, and has been for so long, that he doesn't see why somebody would have no clue.

But how do you group students? Ask them to turn to their neighbors, and chances are they're sitting next to a friend who won't be too helpful. Dysfunctional groups form. Instructing students to find classmates with a different answer doesn't always improve things; some just ignore the order.

So Mr. Mazur and his team set to work on their high-tech matchmaking venture. They asked students to fill out a 20-question survey about their study habits, attitudes toward science, and confidence in their abilities. The research group is now crunching these data to understand which questions are good indicators for pairing students. Already, though, they've found substantial improvement just by matching people with right and wrong answers, says Brian Lukoff, a Learning Catalytics co-founder and postdoctoral fellow who teaches calculus at Harvard.
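The core matching rule, pairing students who gave different answers, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Learning Catalytics' algorithm. It ignores the survey data the team is still analyzing, uses a hypothetical seat map of (row, column) coordinates, and greedily pairs each student with the nearest unpaired classmate whose answer differs. The function `pair_students` and the student names are made up.

```python
from math import dist


def pair_students(seats, answers):
    """Greedily pair each student with the nearest unpaired classmate who answered differently.

    seats:   name -> (row, column) in a hypothetical seat map
    answers: name -> that student's answer to the most recent question
    Returns (pairs, leftover); leftover students found no one who disagreed.
    """
    unpaired = sorted(seats)  # alphabetical order keeps the result deterministic
    pairs, leftover = [], []
    while unpaired:
        me = unpaired.pop(0)
        rivals = [s for s in unpaired if answers[s] != answers[me]]
        if not rivals:
            leftover.append(me)
            continue
        # Closest disagreeing classmate by straight-line distance between seats.
        partner = min(rivals, key=lambda s: dist(seats[me], seats[s]))
        unpaired.remove(partner)
        pairs.append((me, partner))
    return pairs, leftover


seats = {"ana": (0, 0), "ben": (0, 1), "cho": (1, 0), "dev": (1, 1)}
answers = {"ana": "C", "ben": "A", "cho": "C", "dev": "B"}
print(pair_students(seats, answers))  # ([('ana', 'ben'), ('cho', 'dev')], [])
```

Because the matching is greedy, it is quick but not guaranteed to be optimal. Students returned as leftovers might be folded into a nearby group, which would produce trios like the one Mr. Falloon was assigned.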

On a recent Tuesday morning, Mr. Lukoff demonstrates the system in his math class. As students file in, they log on to the software from whatever device they carry, be it a laptop, tablet, or smartphone. Then they check in to their seats; the site helps out with a map similar to what you'd see buying an airplane ticket.

Today's lesson focuses on finding the area under a curve. Mr. Lukoff cues up a problem, which appears on students' screens, and gives them a few minutes to solve it.

Mr. Falloon, a sweatpants-wearing freshman from Chicago, chews on the eraser of his mechanical pencil. He scribbles in his notebook.

"If you don't know, just guess," says Mr. Lukoff, 29, who looks like a student, with jeans and sideburns.

Mr. Falloon, uncertain, selects an answer on his laptop: "C."

"OK, so now what I want you to do is talk to the person who your screen says to talk to, and try to convince them that you're right."

Mr. Falloon's laptop flashes the name of Emily Kraemer, a freshman from Florida. She thinks the answer is "A," which gets them arguing—exactly what the matchmaking algorithm intended. Meanwhile, Mr. Lukoff's screen displays a map of how everyone answered the question, data he can use to eavesdrop on specific conversations.

But, at least in this problem, the robot's pairing fails to spark calculus harmony. Their chat over, she still seems to believe the answer is "A," and he sticks with "C."

He's right.

"Oh man," Ms. Kraemer sighs.

Still, students express enthusiasm for Learning Catalytics' matchmaking, even if they appear a bit oblivious as to how the software selects partners.

"It's not someone you actually always interact with," says Alexis Smith, 18, a freshman from Alabama. "So it mixes it up."

"And then you know their name, too," says Mr. Falloon. "So if you forgot, it's less awkward."

Signals for Success

Classroom data-mining isn't just taking off at rich universities like Harvard. In a community-college sector racked by budget cuts, one Arizona institution sifts through data on student behavior in online courses to figure out who is at risk of underperforming or dropping out—and how to help.

By the eighth day of class, Rio Salado College predicts with 70-percent accuracy whether a student will score a C or better in a course.

That's possible because a Web course can be like a classroom with a camera rigged over every desk. The learning software logs students' moves, leaving a rich "clickstream" for data sleuths to manipulate.

Running the algorithms, officials found clusters of behaviors that helped predict success. Did a student log in to the course homepage? View the syllabus? Open an assessment? When did she turn in an assignment? How does his behavior compare with that of previous students?

The college translated that analysis into a warning system that places color-coded icons beside students' names in the course-management system. Shannon F. Corona, a seven-year online teaching veteran who is faculty chair of the physical-science department at Rio Salado, says the alerts improved her outreach. Before, she knew which students were doing great. She also knew which had tuned out. But she had a harder time pinpointing those in between, struggling yet still trying.

Now, when Ms. Corona logs in to her Chemistry 130 course, she takes students' temperature with a glance. The software flags them as green (likely to complete the course with a C or better), yellow (at risk of not earning a C), and red (highly unlikely to earn a C). If she hovers her mouse over the color, she gets more details. For one student flagged as yellow, for example, the system reports that he is doing an excellent job logging in to the class and a good job engaging with lessons, but falling behind when it comes to the pace of assignment submissions.

That might be the online equivalent of a student who shows up to class but struggles with the content, she says. Ms. Corona e-mails yellow-tagged students asking if they'd like her help or a tutor's.

"Especially for online students, they sometimes feel isolated," she says. "And a lot of instructors, just because of how the system is set up, you might miss it. You don't really know where they are, how they're doing, because they haven't asked you any questions."
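A warning system like Rio Salado's can be thought of as two steps. First, turn clickstream behaviors into an estimated probability of earning a C or better. Then, bucket that probability into a color. The Python sketch below assumes a logistic model over the kinds of behaviors the article lists. The feature names, weights, bias, and the 0.7 and 0.3 cutoffs are hypothetical placeholders, not the college's actual model, which the article does not disclose.

```python
from math import exp

# Hypothetical weights for behaviors the article mentions; not Rio Salado's real model.
WEIGHTS = {
    "logged_in_day1": 0.8,     # logged in to the course homepage early
    "viewed_syllabus": 0.5,
    "opened_assessment": 0.6,
    "assignment_pace": 1.5,    # share of due assignments submitted so far, 0.0 to 1.0
}
BIAS = -1.2


def prob_c_or_better(features):
    """Logistic estimate of the chance of earning a C or better; missing features count as 0."""
    z = BIAS + sum(w * float(features.get(name, 0)) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + exp(-z))


def flag(p, green_at=0.7, red_below=0.3):
    """Bucket a probability into the three colors; both cutoffs are hypothetical."""
    if p >= green_at:
        return "green"
    if p < red_below:
        return "red"
    return "yellow"


engaged_but_behind = {"logged_in_day1": True, "viewed_syllabus": True, "assignment_pace": 0.0}
print(flag(prob_c_or_better(engaged_but_behind)))  # yellow
```

In this toy example, a student who logged in early and viewed the syllabus but has submitted nothing comes out yellow. That roughly matches the profile Ms. Corona describes: engaged with the course but behind on assignments. Per-feature contributions (weight times value) are one simple way to produce the kind of hover-over detail her course page shows.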

But can you change a student's trajectory? The college has experimented with various intervention strategies, so far with mixed results. For example, early data showed students in general-education courses who log in on Day 1 of class succeed 21 percent more often than those who don't. So Rio Salado blasted welcome e-mails to students the night before courses began, encouraging them to log in.

The next step is a widespread rollout of the color-coded alerts, one that will put the technology in the hands of many more professors and students. The hope, Ms. Corona says, is that a yellow signal might prompt students to say to themselves: "Gosh, I'm only spending five hours a week in this course. Obviously students who have taken this course before me and were successful were spending more time. So maybe I need to adjust my schedule."

No one quite knows where education's analytics revolution will lead, but it's a safe bet that today's experiments will seem crude compared with what's coming.

Fast-forward a few years, and data-sharing choices could be part of starting college, like roommate assignments. Students may have a "buffet-like dashboard" that allows them to select which data to expose to their university, says George Siemens, an analytics expert at Canada's Athabasca University. That might include high-school courses, social-media profiles, library usage, and demographic details.

Colleges will push for more and more info, Mr. Siemens speculates. "It'll be like, 'Oh, if you give us your socioeconomic data, we can target the best learning materials for you, or the best help resources,'" he says.

The result may be that if 100 students take introductory calculus, the computer will do much more than just predict those at risk of failing. It will customize a different learning experience for every student.